INTRODUCTION

As part of the 4th-year mechatronics engineering design project, our team aims to develop a navigation system for autonomous unmanned aerial vehicles (UAVs) that combines vision and sonar sensing. The system should guide a UAV to recognize certain geographic features and identify its location relative to an identified feature, while following a safe flight path that avoids in-air collisions. Such a system would be valuable wherever a UAV must interact directly with its environment in enclosed or dangerous spaces, such as in disaster relief.

Our team is currently working as part of the University of Waterloo Micro-Aerial Vehicles (UWMAV) Team, and is primarily responsible for developing the algorithms and sensor packages for UAV feature detection, mapping and flight path planning. For more information on the UWMAV team, please visit the UWMAV website.

Project Progress Tracker

The 2D mapping from camera images is now enabled

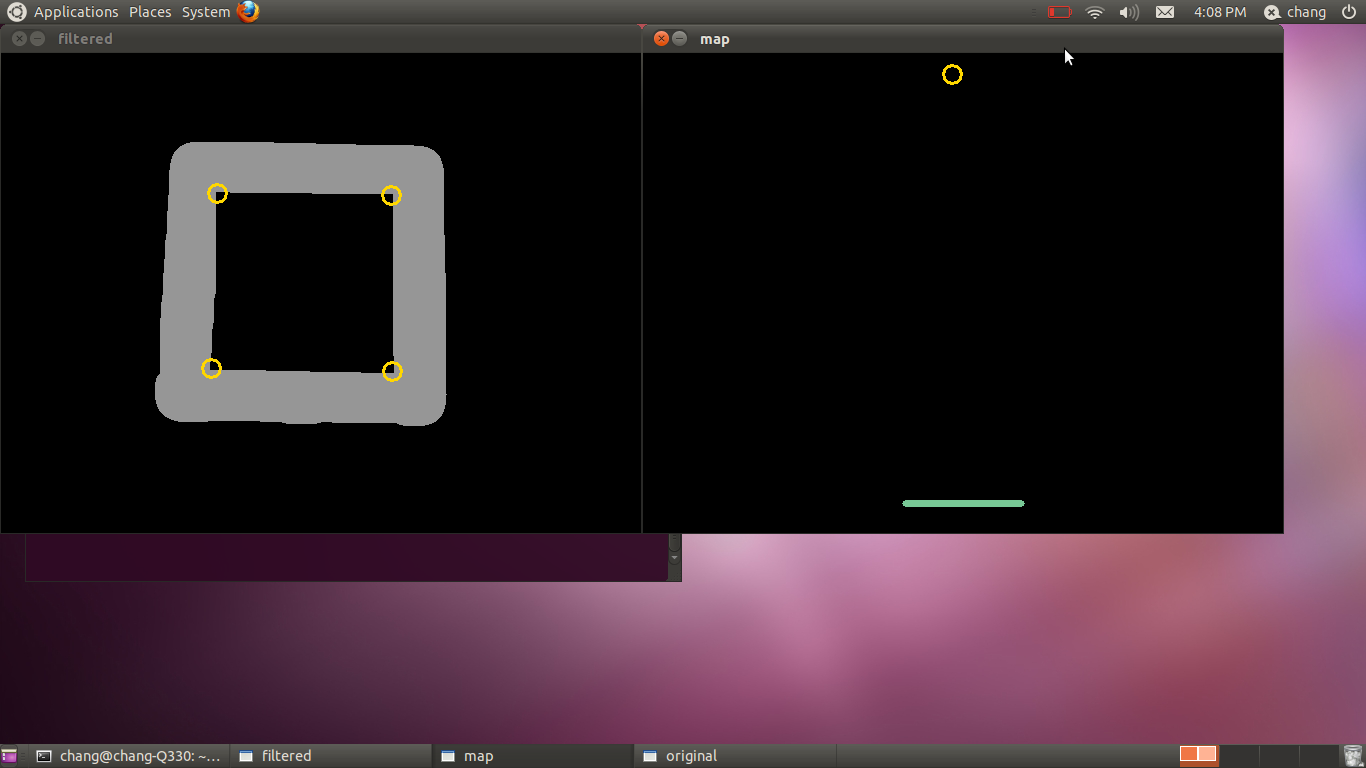

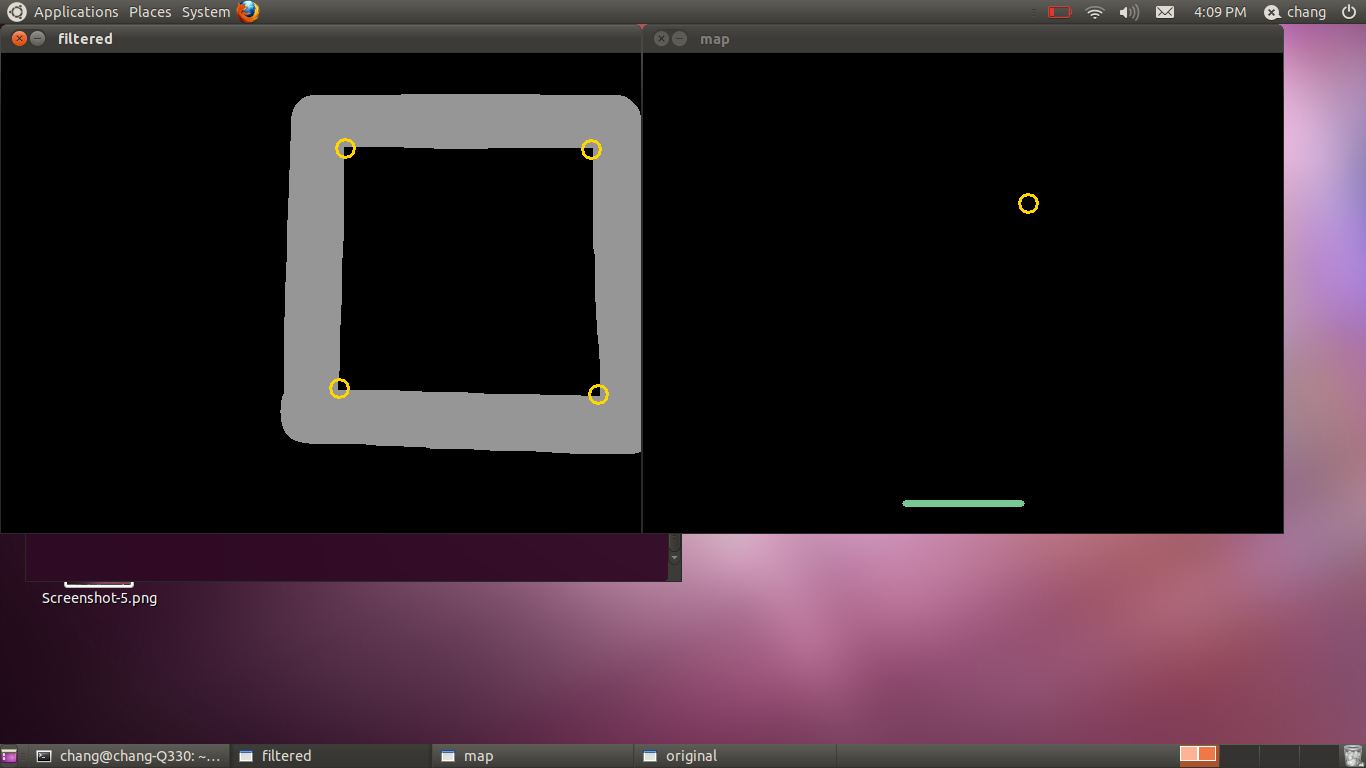

We have added code that lets the camera take an image of the window, detect the four corners of the window, and then calculate and display the location of the camera relative to the window. The algorithm for this segment of code is relatively simple and uses the principle of similar triangles in a projection.

Right now, this 2D mapping function shows only the location of the camera, not the direction it is facing. We are looking to improve the mapping algorithm further so that it also displays the orientation of the camera relative to the window.
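The similar-triangles idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's actual code: the function name `locate_camera`, the `CameraPosition` type, a known physical window width, and a camera that faces the window roughly head-on are all assumptions made for the example. The focal length is given in pixels, as it would come from a camera calibration.

```python
from dataclasses import dataclass


@dataclass
class CameraPosition:
    lateral_m: float   # camera offset to the right of the window centre (metres)
    distance_m: float  # distance from the camera to the window plane (metres)


def locate_camera(corners_px, window_width_m, focal_px, image_centre_x):
    """Estimate the 2D camera position from four detected window corners.

    Hypothetical helper: assumes the real window width is known and the
    camera looks at the window roughly head-on (no orientation estimate).
    """
    xs = [x for x, _ in corners_px]
    left, right = min(xs), max(xs)
    width_px = right - left
    if width_px <= 0:
        raise ValueError("corners must span a positive width in the image")

    # Similar triangles: width_px / focal_px == window_width_m / distance_m
    distance_m = focal_px * window_width_m / width_px

    # The same ratio converts the window's pixel offset from the image
    # centre into a metric offset at that distance.
    centre_px = (left + right) / 2.0
    window_offset_m = (centre_px - image_centre_x) * distance_m / focal_px

    # A window seen to the right of the image centre means the camera
    # sits to the left of the window, hence the sign flip.
    return CameraPosition(lateral_m=-window_offset_m, distance_m=distance_m)
```

For instance, a 1 m wide window that appears 100 pixels wide through a lens with a 470 pixel focal length would be placed about 4.7 m away. A window that appears smaller in the image is therefore farther away, and a window shifted toward the right edge of the image puts the camera to the left of the window.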

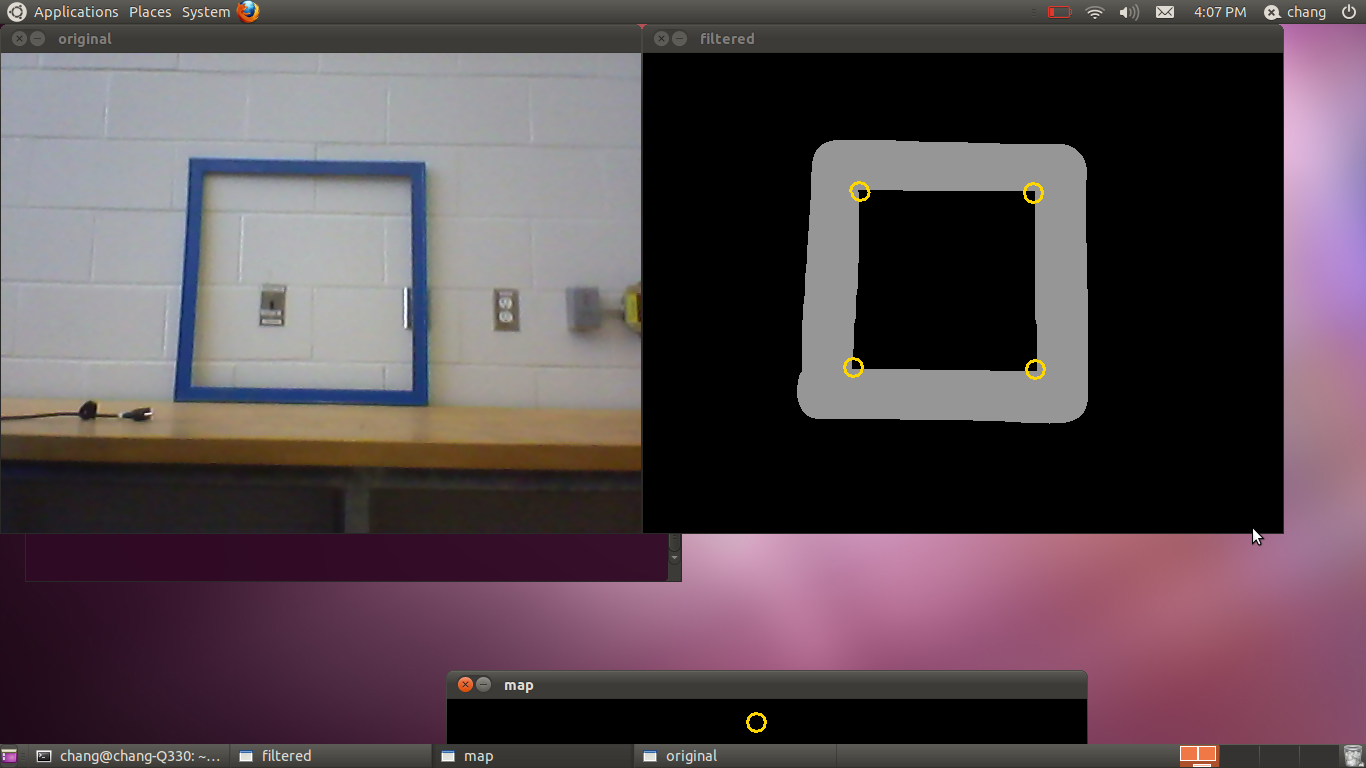

Test Case #1:

Window at the centre of image taken from a far distance

Figure 2-1. The filtered image for the blue-frame window and its four detected corners

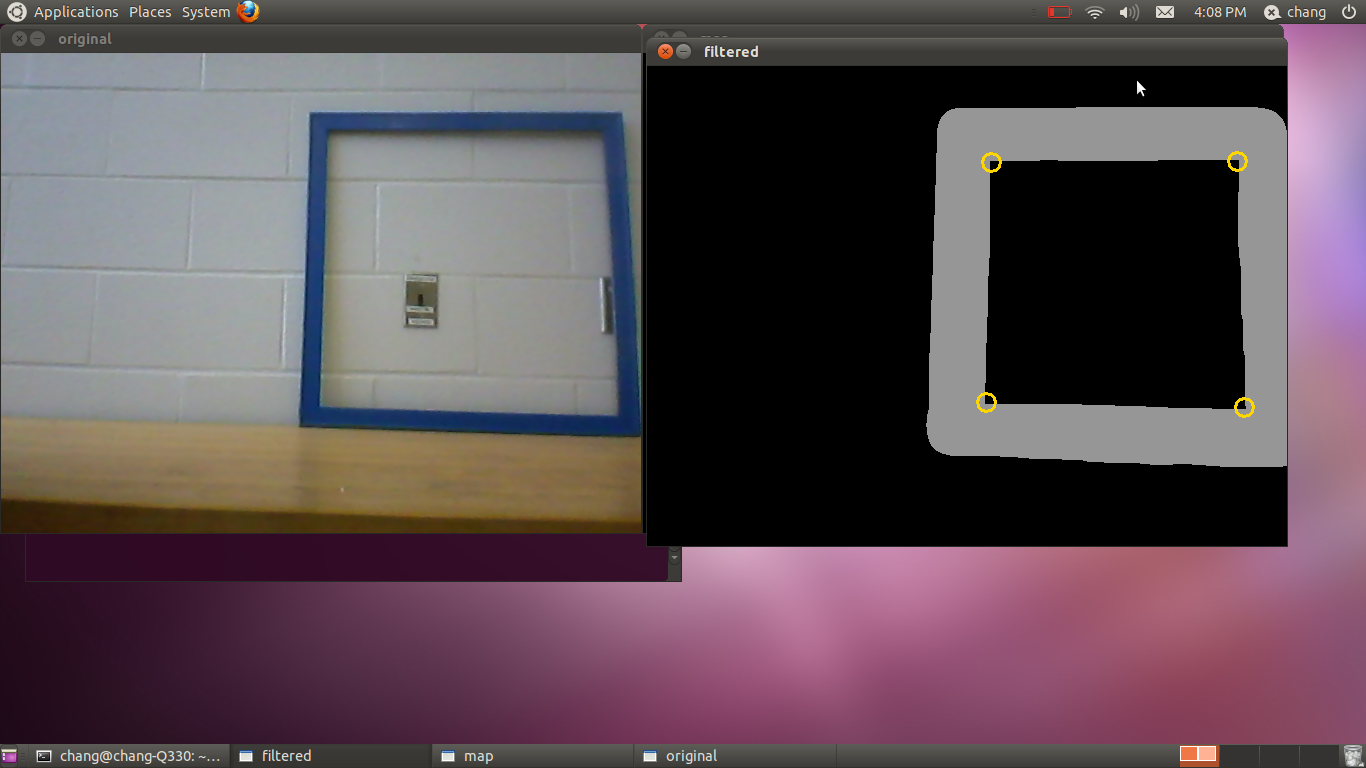

Test Case #2:

Window at the right side of image taken from a closer distance

Figure 2-3. The filtered image for the blue-frame window and its four detected corners

01.27.2012

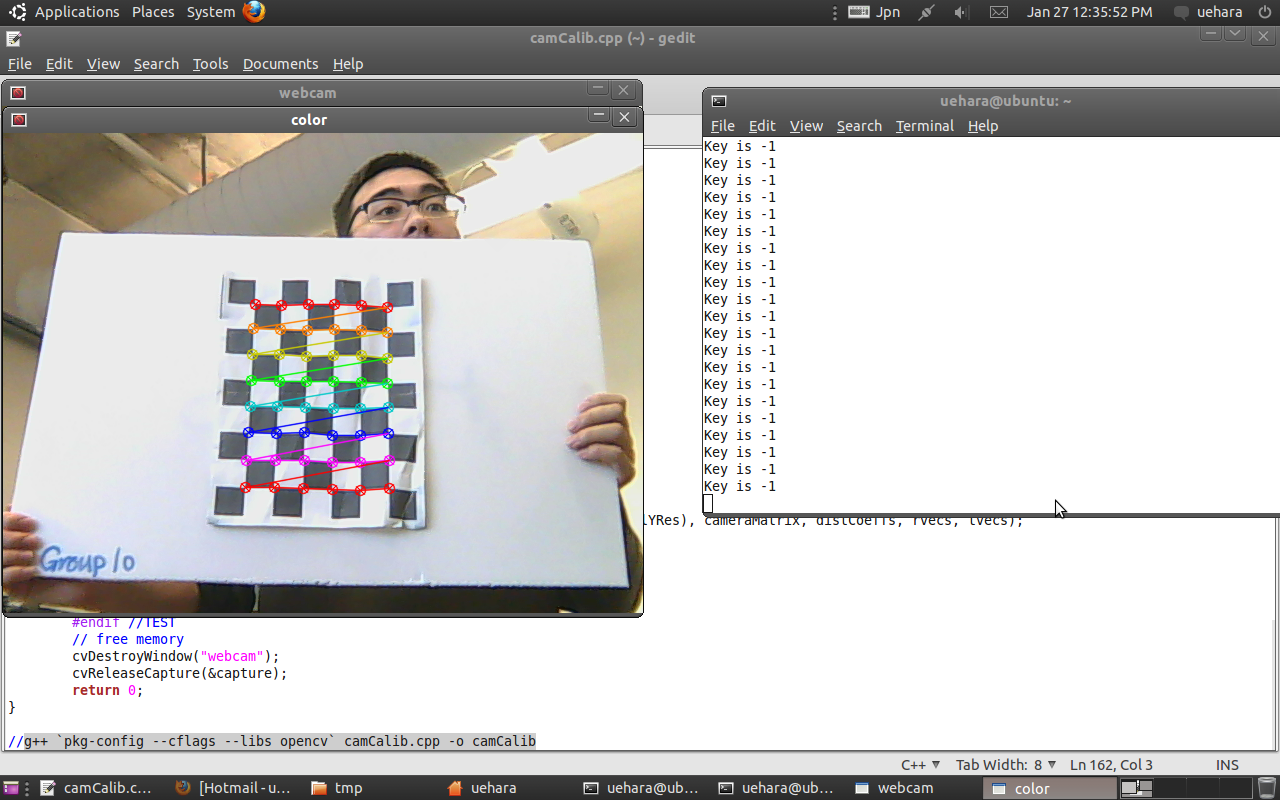

The camera calibration is now complete

The group has calibrated the webcam and obtained the projection matrix for the camera. This camera projection matrix is expected to be used later in calculating the location of the camera (x, y, z) relative to the window.

Camera Projection Matrix Obtained

470.3568 0.000000 154.6004 0.000000

0.000000 472.9332 88.05615 0.000000

0.000000 0.000000 1.000000 0.000000
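To illustrate how a 3x4 projection matrix of this form is applied, the sketch below maps a 3D point to pixel coordinates. It assumes the point is expressed in the camera's own coordinate frame (consistent with the zero fourth column) with z pointing forward. The function name `project` is hypothetical, and the code is a plain-Python illustration rather than the team's implementation.

```python
# Camera projection matrix from the calibration, stored as three rows of four.
P = [
    [470.3568, 0.0, 154.6004, 0.0],
    [0.0, 472.9332, 88.05615, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]


def project(point_xyz, matrix=P):
    """Project a 3D point in the camera frame to pixel coordinates (u, v)."""
    x, y, z = point_xyz
    homogeneous = (x, y, z, 1.0)

    # Matrix-vector product: one homogeneous image coordinate per row.
    u, v, w = (sum(m * h for m, h in zip(row, homogeneous)) for row in matrix)
    if w <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")

    # Dividing by w turns homogeneous coordinates into pixel coordinates.
    return u / w, v / w
```

A point on the optical axis, such as `(0, 0, 2)`, lands on the principal point given by the third column of the matrix, roughly pixel (154.6, 88.1). Recovering the camera position from detected window corners would run this relationship in reverse, which needs the known window dimensions as an extra constraint.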

01.21.2012

The project team's website is now set up and running

In an effort to better communicate with the general public and inform them about who we are and what we are doing, the team has now set up its own website. From now on, we will post news and updates on our project here and let you know how we are doing.